Positional Sound in User Interfaces

Video games are at the forefront of the kinds of rich interactions people can have with computers. In the past decade, there’s been a push for more and more immersive virtual environments, resulting in more advanced APIs and hardware for things such as super-fast 3D rendering. In recent years, OS X has leveraged these advances in the predominantly 2D world of user interfaces, often in brilliant ways, as seen with QuartzGL, CoreAnimation and CoreImage.

In video games, it’s quite common to exploit stereo output or, even better, surround sound to provide positional audio cues. Just as graphics can simulate a 3D space, sounds can be placed positionally in that same space. If you, super-genetically-modified-mutant-soldier, are running around on the virtual battlefield and there is some big-bad-alien-Nazi-demon-zombie dude shooting at you from the side, you will hear it coming from that direction and react accordingly. Directional audio cues can supplement visual cues or even supplant them when visual ones cannot be shown (e.g. something requiring attention outside your field of view).

On OS X, sound is used rather sparingly in the interface, which is probably a good thing. But for those cases where its use is warranted, why not take advantage of the technology available? Just as animation can be used to guide the user’s focus, why not sound? OS X does ship with OpenAL, which is to sound what OpenGL is to graphics, providing a way to render sounds in a 3D space.

I’ve put together a quick proof of concept app (download link near the end of the article). Move the window around the screen and click the button to make a sound. Based on the window’s position, the sound will appear to come from different sides. For the most part that means left or right, since most sound output systems aren’t designed to articulate things in the up/down direction. The program itself basically maps the window position to a point in the 3D sound space. Right now, it doesn’t really use the z-axis (the axis that goes into your screen), but conceivably you could do things like make the sound appear further away based on window ordering. Try using headphones if the effect is not as apparent using speakers.
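To make the mapping concrete, here is a minimal sketch in Python. It is not the demo's code (no source was released), and the names `Rect`, `window_to_sound_position`, `order_index` and `depth_step` are all made up for illustration. It assumes a single screen, Cocoa-style coordinates with the origin at the bottom-left, and OpenAL's default listener, which sits at the origin looking down the negative z-axis.

```python
from typing import NamedTuple, Tuple


class Rect(NamedTuple):
    """A frame in screen coordinates (origin at bottom-left, as in Cocoa)."""
    x: float
    y: float
    width: float
    height: float


def window_to_sound_position(
    window: Rect,
    screen: Rect,
    order_index: int = 0,
    depth_step: float = 0.5,
) -> Tuple[float, float, float]:
    """Map a window's center to an (x, y, z) point in the sound space.

    x and y are 0 at the center of the screen and reach -1 or +1 at its edges.
    z is negative because the default OpenAL listener faces down -z.
    order_index is hypothetical: 0 for the frontmost window, 1 for the
    one behind it, and so on, pushing the sound further away.
    """
    window_cx = window.x + window.width / 2
    window_cy = window.y + window.height / 2
    screen_cx = screen.x + screen.width / 2
    screen_cy = screen.y + screen.height / 2

    x = (window_cx - screen_cx) / (screen.width / 2)
    y = (window_cy - screen_cy) / (screen.height / 2)
    z = -(1.0 + order_index * depth_step)
    return (x, y, z)


screen = Rect(0, 0, 1440, 900)
centered = window_to_sound_position(Rect(520, 300, 400, 300), screen)
lower_left = window_to_sound_position(Rect(0, 0, 400, 300), screen, order_index=2)
```

With OpenAL, the resulting triple would be handed to something like `alSource3f(source, AL_POSITION, x, y, z)` before playing the sound.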

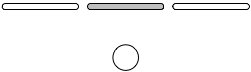

There is a significant technical issue, though. You can’t really know the actual physical dimensions and layout of a user’s screens. In addition, the position of the speakers relative to the screens is also not known. While you can get screen resolutions and relative positions of the screens, these are mostly hints at the actual layout. In my demo program, it is assumed that the screens are relatively close to each other forming one gigantic screen. It is also assumed that the speakers produce a soundstage roughly centered on the primary display (the one with the menubar). It assumes a model like this (the circle is the user and the thin slabs are the monitors, from a top-down view):

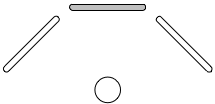
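The "one gigantic screen" assumption can be sketched in a few lines of Python. This is an illustration of the model described above, not the demo's code, and `Rect` and `horizontal_position` are hypothetical names. It assumes all frames live in one global coordinate space, as they do on OS X, where the primary display sits at the origin and a display to its left has negative x.

```python
from typing import NamedTuple


class Rect(NamedTuple):
    """A frame in the global screen coordinate space."""
    x: float
    y: float
    width: float
    height: float


def horizontal_position(window: Rect, primary: Rect) -> float:
    """Left/right position of a window with the soundstage centered on the
    primary display.

    Returns 0 at the center of the primary display and -1 or +1 at its
    edges. Windows on other displays simply continue along the same axis,
    so they land beyond -1 or +1.
    """
    window_cx = window.x + window.width / 2
    primary_cx = primary.x + primary.width / 2
    return (window_cx - primary_cx) / (primary.width / 2)


primary = Rect(0, 0, 1440, 900)
left_display = Rect(-1280, 0, 1280, 900)

# A window filling the left display sounds further left than anything
# on the primary display could.
on_left_display = horizontal_position(left_display, primary)

# A window near the right edge of the primary display is to the right,
# but less extremely so.
near_right_edge = horizontal_position(Rect(1240, 0, 200, 200), primary)
```

Only the primary display's frame is needed here because the other displays are treated as extensions of it. A real implementation would probably want to compress or clamp the values for distant displays, which is exactly the kind of tweaking the model leaves open.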

In reality, it’s probably more likely the user would have a setup like the following:

But who knows, it could possibly be something like this:

The point here is that the effectiveness of this depends on the user’s setup. A particular idealized model would have to be chosen that hopefully works well enough for most people. While pinpoint accuracy is not really feasible, it probably isn’t required either. Human hearing is imprecise; otherwise ventriloquists would never be able to pick up a paycheck. Just an indication of left, right or center is probably enough for these purposes.

Where would this be useful? Well, this all came up yesterday when I received an IM (via Adium). I had my IM windows split up across two screens so I had to scan around a bit to find out which window had the new message. Though the window was on the screen to the left, the audio alert made me look at the main screen since the sound was centered straight ahead. It would be great to see an idea like this implemented in Adium and I’ve filed a feature request with them for their consideration. It’s ticket #11292 so you don’t go and submit a duplicate request.

It would be interesting to see more use of this in user interfaces out there. I don’t want to encourage people to add sounds to their apps if they weren’t already using them but for those that are, it’s something to consider. Overall, the effect is quite subtle but with some tweaking, it can be quite effective.

The link to download the demo program is below. Sorry, no source is provided this time. The code is a hacked-together mess of stuff copied and pasted from an Apple example, as I have never used OpenAL before. This can probably also be implemented in CoreAudio by adjusting the balance between the channels. If you are considering implementing something like this, email me and I’d be happy to discuss details as long as they don’t involve audio APIs since, well, I don’t know them particularly well.
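For the balance-based alternative, one common way to turn a left/right position into per-channel volumes is constant-power panning. The Python sketch below shows only the math, not any CoreAudio calls, and it is not something the demo does. The function name `constant_power_gains` is hypothetical, and `pan` is assumed to run from -1 (fully left) to +1 (fully right).

```python
import math
from typing import Tuple


def constant_power_gains(pan: float) -> Tuple[float, float]:
    """Return (left_gain, right_gain) for a pan value in [-1, 1].

    The gains follow a quarter circle, so left**2 + right**2 stays at 1
    and the perceived loudness stays roughly even as the sound moves.
    """
    pan = max(-1.0, min(1.0, pan))          # clamp out-of-range positions
    angle = (pan + 1.0) * math.pi / 4.0     # 0 (left) .. pi/2 (right)
    return (math.cos(angle), math.sin(angle))


center_left, center_right = constant_power_gains(0.0)
hard_left = constant_power_gains(-1.0)
```

A centered sound gets equal gain in both channels (about 0.707 each), while a hard-left sound gets all of its gain in the left channel. Unlike OpenAL, this gives no sense of distance, only of direction.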

Download PositionalAudioAlertTest.zip (Leopard only)

Thanks to Mike Ashe and Chris Liscio for advice on CoreAudio, which I ended up not needing because Daniel Jalkut suggested I use OpenAL instead, which made things easier.

Category: Downloads, OS X, Software, User Interface

5 comments

October 23rd, 2008 at 7:36 pm

That sounds really dirty to my ears, but I think that’s a problem in the AIF you picked.

Neat idea, though. A real program maybe should take into account a second monitor and make the central point between them. With the sound in my right ear, I want to look at my right display rather than the right side of my main display.

October 23rd, 2008 at 8:30 pm

This is quite seriously the coolest thing I’ve seen in a while – I’ve been wondering how I might better represent notifications from other spaces in Hyperspaces, but this might be a good approach (from one angle of course – not everyone can hear, or has decent sound output).

October 24th, 2008 at 1:06 pm

This reminded me of my psychology of music class, where I read how the military used positional sound so that they could listen to multiple conversations at roughly the same time, as long as they were in different “3d space”.

October 24th, 2008 at 2:20 pm

Steven: It does take into account multiple monitors but it treats the screen space more like a continuum so if it’s on the right screen, it should sound more to the right than something on the right of the main screen (which will still appear to be off to the right, just not as much). It definitely does need tweaking though and I’m sure one could get a better mapping of the screen space to the sound space with some focused effort.

Tony: I’d love to see what you end up doing with this in Hyperspaces. Drop me a note if you release it with positional sound in there.

Gabe: Interesting. Do you recall if this was done in conjunction with visual input (i.e. showing a crowded room full of people talking) or just audio only? Got a link to the study?

In any case, thanks for the comments all. I’m glad you guys found it interesting.

July 8th, 2009 at 12:59 pm

Pulseaudio has module-position-event-sounds which does something similar.